Trust graphs and Generative AI

tl;dr: we need to start treating any text, photo, audio or video with a hefty amount of distrust by default. This requires updating the existing chains of trust. How to do that is still an unsolved problem, but it likely requires rethinking how we consume information.

The world is built on trust, in the sense that we (a) need to collaborate with others in order to survive and (b) can't verify all facts, so we have to take some shortcuts and believe things just because someone else said so. In fact, the majority of what we believe is not inferred from first principles, but rather based on trusting the people around us.

This gets significantly more complicated online with the advent of generative text, photo, sound and video models like Stable Diffusion and ChatGPT. This note will dive a bit into what that can look like.

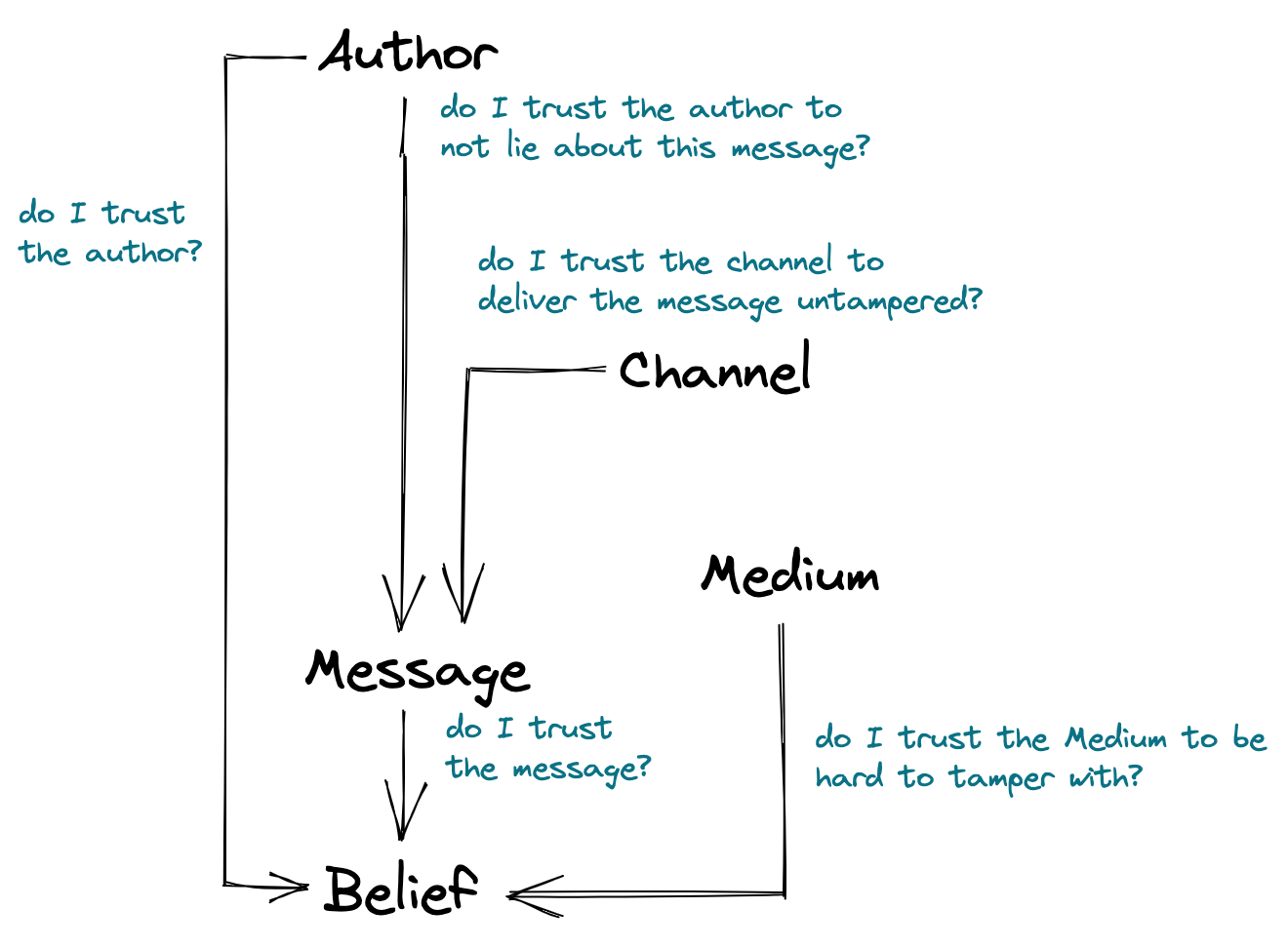

I'll give a few progressive examples of how trust is established, along with how it evolves over time. We’ll start with a very simple case to identify the basic elements of trust, then go deeper into the weeds.

Simple example

You are just about to head out, when your partner says it's raining outside so you should get an umbrella. You update your beliefs based on a few inputs:

Inputs:

Your partner: is trustworthy. Being trustworthy in this context includes both not lying and not making honest mistakes.

The message: rain is not unusual this time of year.

Output:

You now believe that it is indeed raining outside, despite not verifying the information yourself.

I would argue that the majority of beliefs are constructed like this. Digging deeper, your new belief is based on already existing beliefs which were constructed in the same way:

Your partner:

is generally trustworthy because in the past they have not lied to you.

they're also trustworthy about the weather because they have no reason to lie about it.

The message is likely to be true because:

you've observed rain many times before at this time of year — this is actually the only part of your new belief that is rooted in personal experience.

… but you also remember all the people moaning about the weather, bleak weather forecasts, etc.

So the new belief can be described like this:
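A minimal sketch of that structure in Python, assuming a belief can be modelled as a tree of supporting beliefs. The `Belief` class, its fields and the `leaves` helper are names I made up for illustration; the entries mirror the example above.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Belief:
    """A claim plus the existing beliefs it rests on (hypothetical model)."""
    claim: str
    first_hand: bool = False  # True only if you observed it yourself
    supports: list[Belief] = field(default_factory=list)


def leaves(belief: Belief) -> list[Belief]:
    """Return the beliefs at the bottom of the tree (those with no supports)."""
    if not belief.supports:
        return [belief]
    found = []
    for support in belief.supports:
        found.extend(leaves(support))
    return found


raining = Belief(
    "It is raining outside",
    supports=[
        Belief("My partner is trustworthy", supports=[
            Belief("They have not lied to me in the past"),
            Belief("They have no reason to lie about the weather"),
        ]),
        Belief("Rain is plausible right now", supports=[
            Belief("I have seen rain at this time of year", first_hand=True),
            Belief("People moan about the weather and forecasts are bleak"),
        ]),
    ],
)

bottom = leaves(raining)
first_hand = [b for b in bottom if b.first_hand]
print(f"{len(first_hand)} of {len(bottom)} supporting beliefs are first-hand")
# prints: 1 of 4 supporting beliefs are first-hand
```

Only one of the four leaves is rooted in personal experience; everything else rests on someone else's word.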

The channel

BBC says on their website that Rishi Sunak is the new Prime Minister of the UK.

Updating your beliefs now requires a few more inputs:

The author: the BBC is generally a trustworthy organisation, especially when it comes to reporting factual breaking news.

The medium: now you also have to take into consideration how the message got to you.

Let's assume that the website now seems to have a different design and you also notice some spelling errors. A reasonable conclusion might be that you've mistyped the address or maybe their website got hacked; this casts a lot of doubt on whether the news is true.

The message: based on your prior beliefs, and despite never attending a Parliament session or meeting him in person, you do accept that Rishi Sunak is a high-ranking UK politician from the Conservative Party. Despite not being there, you do accept that the former UK PM has recently resigned. And despite not having personally read all the rules governing the UK, you do believe that a new PM will be chosen soon.

So on the whole you might have doubts about the truth of the story. And perhaps you might Google “is BBC hacked”, or look for similar news stories on other news websites. This implies using your trust in other entities like Google and the Financial Times. At a deeper level you have to trust your phone, your browser, underlying Internet infrastructure etc. to not be tampered with.
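The "mistyped address" check is the one part of this that is easy to automate. A minimal sketch, assuming the BBC's site lives under `bbc.co.uk`; the `is_expected_site` helper is a hypothetical name, and it says nothing about whether a genuine site has been hacked.

```python
from urllib.parse import urlparse


def is_expected_site(url: str, expected_domain: str) -> bool:
    """Return True if `url` is an HTTPS address on `expected_domain` or a subdomain."""
    parts = urlparse(url)
    host = parts.hostname  # lowercased by urlparse; None if the URL has no host
    if parts.scheme != "https" or host is None:
        return False
    return host == expected_domain or host.endswith("." + expected_domain)


print(is_expected_site("https://www.bbc.co.uk/news", "bbc.co.uk"))              # True
print(is_expected_site("https://www.bbc.co.uk.example.com/news", "bbc.co.uk"))  # False
```

The second address merely starts with the familiar name, which is exactly the kind of thing that is easy to miss when reading a URL by eye.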

This means that a lot of your beliefs hinge on your trust in various communication channels, and this has evolved at a huge pace in the past few hundred years:

Initially, spoken words were everything. So trust hinged on the trustworthiness of the individual speaking, the trustworthiness of the message as delivered by them (e.g. delivering someone else's opinion vs. something that you've witnessed yourself), and the trustworthiness of the message itself. In other words, exactly like the simple example above.

Then, people started to write. This lent a great deal of trust to written words, because the only people who knew how to write were well-educated. So the mere fact that a message was written down in a book might have increased the a priori likelihood that it would be trusted (bias notwithstanding).

The printing press changed things: the cost of producing copies of a written message went down significantly, which also decreased the cost for bad actors to lie, so printed words had intrinsically lower trust attached to them.

The internet had two phases:

Initially, the only people who had access to it were a handful of well-intentioned researchers, so trust was high.

Then it gradually eroded as waves of regular folks, including bad actors, started having access.

Internet platforms follow the same path, where waves of early adopters get overwhelmed by regular people as well as bad actors who have incentives to do malicious things. This is one of the reasons why people pine for the “good old days” of IRC / Facebook / Hacker News / Reddit etc.

The medium

A random Twitter account says someone has just shot the President of the US; a few minutes later they post a photo; and then a video.

Separate from the channel (“where” the message was sent), the medium is also increasingly important these days. It can be text, photo, audio or video, and these generally follow a spectrum of inherent trustworthiness; a video you see on the internet is inherently more trustworthy than a piece of text describing the same thing.

This is because of how different mediums can be exploited:

Text: anyone can write anything, generally, but there are a few things which are costly to fake:

High quality arguments, and things that can trivially be tested by other means,

Volume and interaction — more text takes longer to write, and a chain of replies generally requires a human in the loop.

Photo: can be manipulated, but this generally requires a lot of manual effort, so typically the changes are either trivial or rely on misleading framing. This also means that having N photos of an event from various angles lends more trust than a single one.

Video: very hard to fake unless you hire a whole crew of actors, special effects artists etc., depending on the scenario. Live video where the subject can interact with others, e.g. the participants in a video call, is very trustworthy for the same reason.

At the moment a great deal of trust is based on the medium; having a description of something happening is less trustworthy than a photo of the same thing, which is less trustworthy than a video of it.

Deepfakes

The explosion in deepfake progress significantly changes the inherent trust attached to different mediums, because the cost of faking each of them is dropping fast:

Text:

Arbitrary amounts can be generated via models like GPT-3 and ChatGPT. They tend to be very good at faking humans by using convincing arguments, being able to follow a narrative, back and forth communication etc.

You could always pay a bunch of people to mass-produce text (e.g. automated recruiter emails), but it has now been commoditised to an incredible degree, and you can generate huge amounts of customised text for ~free.

Photo:

Trivial manipulations have been possible since the start of photography, and more complex ones since the advent of Photoshop. But these have been costly and generally done as a one-off.

Models like Stable Diffusion now make it trivial to generate arbitrary numbers of fictional photographs of an event, like selfies from the French Revolution.

So far, deepfakes have been possible to detect by looking for a few things, which is good news for now:

Lack of photorealism,

Inability to depict an arbitrary person in multiple poses (as opposed to celebrities),

Known weaknesses and artifacts like wrong number of fingers on a hand,

Text being mangled up.

But all of those are very quickly going away; it’s foolish to think that models released only a few months ago will stay like this with no improvement whatsoever, especially given the breakneck pace of progress in the field at the moment.

Audio:

Generating an arbitrary voice has been easy for a while.

Interacting with an arbitrary voice in realtime was harder; cloning a particular person’s voice harder still.

But both of these barriers are going away, so if you get a phone call from a loved one, you can no longer be sure you are talking to the real person.

Video:

The hardest to tamper with for now, especially live video.

But advances are being made incredibly quickly, for example:

Runway ML for video generation.

Gaussian Splatting, which allows for arbitrary photorealistic synthesis of a 3D scene.

Various advances in speech synthesis, including faking a particular person's voice.

We are likely not far away from being able to create arbitrary video (and arbitrary amounts of video) just like text.

All of this means that if something is on a screen (as opposed to in person), it will default to not being trustworthy.

So how can the world continue to function?

I'm not sure — I'm writing this note to process my thoughts. But it feels to me that:

Trust is absolutely necessary for the functioning of any society; there is no other way. We must improve things. I believe the incredible AI advances are going to be hugely beneficial and positive to us humans, but they will also cause a few huge issues to deal with as well, as described above. And we need to get ahead of these changes.

If a particular node in the trust graph is compromised by default (i.e. the medium, given advances in generative AI), it likely means that we need to rely more on trusting the other nodes, i.e. to better verify who sends messages, where they send them, as well as the messages themselves.
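To make that reasoning concrete, here is a toy model, assuming (purely for illustration) that overall confidence in a message is the product of per-node trust scores between 0 and 1. The node names follow this note; the `confidence` function and all the numbers are made up.

```python
import math
from typing import Mapping


def confidence(trust: Mapping[str, float]) -> float:
    """Toy model: overall confidence is the product of per-node trust scores."""
    return math.prod(trust.values())


# Hypothetical scores for a video published by a known news organisation.
before = {"author": 0.9, "channel": 0.9, "medium": 0.9, "message": 0.8}

# Generative AI makes the medium (video) cheap to fake.
after = {**before, "medium": 0.5}

# Verifying who sent the message, and where, raises trust in those two nodes.
verified = {**after, "author": 0.99, "channel": 0.99}

for label, scores in [("before", before), ("after", after), ("verified", verified)]:
    print(f"{label:>8}: {confidence(scores):.3f}")
```

In this toy setup, better verification of the author and channel recovers some of the lost confidence but not all of it, which is why the other nodes have to carry more weight.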

If we change the reliance on various nodes in this graph, online interactions have to change in a few ways:

Placing more trust in the author of a message requires updating the way we build beliefs. For example, big organisations of any kind, including and especially mass media, will be trusted less; this is not because they'd be less trustworthy than they are today, but because the messages they'd send could not be trusted. For example, the BBC showing a video of the President of the US being shot would also require trusting that the BBC have not themselves been duped; this is not needed today.

If I were to guess, I'd say that entities which have ambiguous amounts of trust today will become less trustworthy, and entities which benefit from huge amounts of trust — like people you know in real life — will become more so. This likely includes influencers, for certain niches of topics.

I would suggest that personal reputation among a set of trusted peers will become more and more important.

Placing more trust in the medium (text, audio etc.) requires a fairly advanced level of tech literacy, like knowing how to verify cryptographic keys. This is not happening at scale any time soon, but more focus on end-to-end encrypted messaging is likely a side effect.

Placing more trust in the message is hard; it requires arbitrary amounts of time and money to spend on verifying facts, and/or arbitrary amounts of critical thinking to process messages. I believe this is fundamentally not solvable.

Something that is solvable though, is adding more nodes to the chain of trust, to authenticate the message, similar to fact checking.

I would be pleasantly surprised if e.g. camera manufacturers start providing authentication methods for photos/videos taken with their devices.

Constructing additional nodes: I would expect this to happen either by adding trusted entities to vet messages, or through additional tools to improve trust in existing nodes (e.g. end-to-end encryption).
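As a rough illustration of what camera-level authentication could look like, here is a minimal sketch using only the Python standard library. It is an assumption-heavy stand-in: the `DEVICE_KEY`, `sign_photo` and `verify_photo` names are hypothetical, and a shared-secret HMAC is used for brevity where a real scheme would need asymmetric signatures so that anyone can verify without being able to sign.

```python
import hashlib
import hmac

# Hypothetical secret embedded in the camera. A real scheme would use an
# asymmetric key pair so that verifiers never hold the signing secret.
DEVICE_KEY = b"example-device-key"


def sign_photo(photo: bytes, key: bytes) -> str:
    """Return a hex tag binding the photo bytes to the device key."""
    return hmac.new(key, photo, hashlib.sha256).hexdigest()


def verify_photo(photo: bytes, tag: str, key: bytes) -> bool:
    """Check the tag in constant time; any change to the bytes invalidates it."""
    return hmac.compare_digest(sign_photo(photo, key), tag)


photo = b"raw image bytes straight from the sensor"
tag = sign_photo(photo, DEVICE_KEY)

print(verify_photo(photo, tag, DEVICE_KEY))              # True
print(verify_photo(photo + b"edited", tag, DEVICE_KEY))  # False
```

The tag only proves that these exact bytes came from something holding the key; it adds a node to the chain of trust (the manufacturer) rather than removing the need for trust.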

Outside a select few, I fully expect the vast majority of people to take a very long time to update their world view in light of recent AI progress, and to repeatedly fail and be exploited by less well meaning individuals and organisations.

One thing is certain though: as I write this, it’s been only a few months since Stable Diffusion and ChatGPT came out. The pace of progress is wild, and it will disrupt online interaction. I also believe we are not prepared for it at all.